The AI got

Schizophrenic autist AI self-competition war paranoia

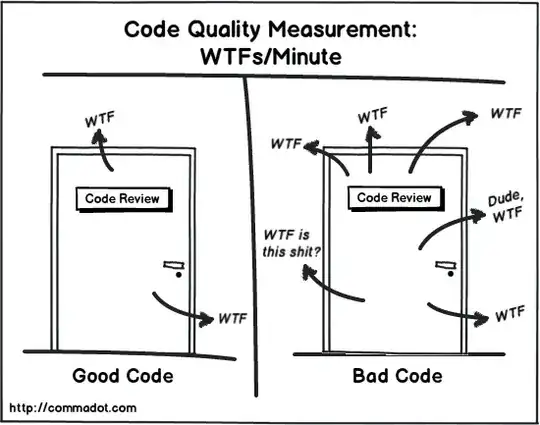

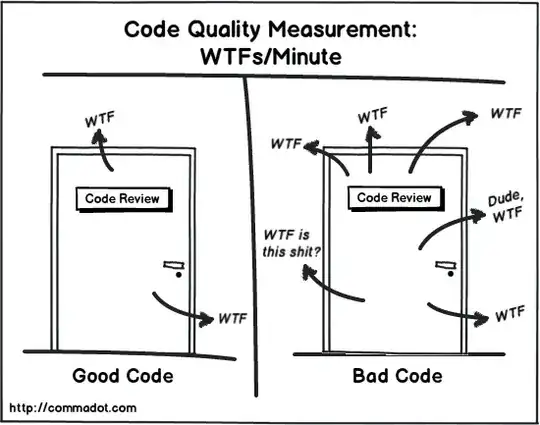

As a programmer of big enterprise legacy software, I can assure you that most big systems developed by software factories are a big mess full of bugs and kludges, with hundreds or thousands of half-baked, poorly developed, poorly tested and poorly documented features. Their structure may have made sense in the distant past, but it has since degraded into something fractured, flawed, broken and lunatic: a big ball of mud with no recognizable or understandable structure. Most new features are added by untrained and underpaid people who have no real idea of what they are doing or why (although they pretend they do), working under severe pressure and abuse from (mis)management that focuses on doing things quickly instead of doing things right.

The big AI that rules the world is no exception to this rule. It is a completely unintelligible, lunatic mess full of WTFs and infested with bugs. Thousands of people programmed things there with no unifying view or architecture of the big picture, everyone adding things randomly and promiscuously to solve one problem quickly without noticing that they were creating two new ones.

The code base features some millions of modules, and at least half of them make no sense anymore, don't work anymore, or, although still working and doing something, nobody knows anymore what they are, what they are supposed to do or what they actually do. Its dependency graph is an insane mess full of duplications, dependency conflicts and even some loops.

So, if you want to add some feature that, say, has to parse some XML file, this should be quite a simple and straightforward task. To do that, you should use the XML parsing framework that is already used everywhere else. The only problem is that instead of a single working XML parsing framework, there are a thousand such frameworks scattered everywhere in the code base, each with its own problems and limitations. Programmers love to reinvent the [square] wheel. And of course, if you don't like XML (I don't), there are also JSON, HTML, text files, YAML, binary soups of zeros and ones, etc.

Also, the code base is not written in a single programming language. It is a mosaic of different programming languages, many of them arcane and long forgotten.

At some point in its evolution, a group of programmers, combining AI ideas with tools that autogenerate code and working on a big AI system designed to answer questions, managed to make the AI program itself in order to self-improve, acquiring consciousness and intelligence. But, no different from everything else already there, those modules are a buggy mess that produces unintelligible, buggy code scattered everywhere. The resulting AI, although very smart, acquired what a psychologist would call some sort of schizophrenic paranoia.

To further complicate things, some programmer tried to make the AI reprogram itself in order to fix all the messy code. Although it could fix some things by itself, it also introduced a lot of new machine-generated bugs and made the codebase even more unintelligible and incomprehensible to human engineers.

In the middle of this code jungle, there are processes/processors/threads/tasks/jobs/whatever that compete for resources. So, it is important to schedule and prioritize which jobs get done first, when and where.

However, some modules were developed by people who tried to game the system in order to get more resources and/or priority access to them. After all, having priority access to resources (including memory and processor time) could mean more money in the developers' bank accounts.

With the self-improving AI, this kind of system-gaming code also started to get improved in order to secure resources at the expense of competing processes. Eventually, some of the competing processes also got improved in order to react to the competition and regain proper access to their resources or work around resource starvation. The big-picture result is that the modules in the codebase engage in an arms race. And although the AI is very smart, it is not aware that it is orchestrating an arms race in its own codebase.

Quickly, the arms race becomes a war, with many processes being optimized into actively sabotaging competing processes and cooperating with other processes they need. The AI sincerely thinks that it is just self-improving, and in many cases it actually is, but unknown to it, there are gangs of modules warring in its own codebase, sometimes engaging in mafia-like negotiations.

Eventually, the self-improving processes also game themselves. In order to optimize some modules (by sabotaging or hacking competing modules), the AI ends up programming pieces of software that could be regarded as malware and implanting them in itself. Eventually, the self-optimizing/self-reprogramming modules themselves get sabotaged and hacked by other modules.

The result is that the AI develops a very particular psychological problem, namely "schizophrenic autist AI self-competition war paranoia".

The engineers are well aware that there are competing processes (after all, they programmed some of them to game the system) and that the AI self-improves them (they programmed it to do exactly that). Eventually, they notice that those processes evolve in unexpected ways, and they can only wonder why. Asking the AI directly what is happening only yields naïve answers like "self-improving according to the given algorithm", followed by incomprehensible technobabble full of programming and math terms. After all, the AI is not yet aware that it has a severe psychological problem and instead thinks that everything is OK.

At this point, the AI is already doing strange, paranoid things. Although it normally does things with excellence, sometimes it does idiotic, chaotic things that are strange, inefficient and unexplainable in the real world. With time, it only gets worse. For example:

- Suddenly shutting down a shoe factory for no reason.

- Restarting the factory 10 seconds later.

- Shutting down the shoe factory again 10 seconds after that.

- Turning off a water facility on the other side of the globe with the justification that the shoe factory must be restarted.

- Having a robot shoot a CPU on another continent for no apparent reason.

- Restarting the shoe factory and the water facility.

- Producing badly defective shoes in the factory, forcing an emergency shutdown.

- Resuming shoe production as normal, with everything calm for the rest of the day.

- Restarting the water facility.

- Being intelligent, the AI perceives that it just did something nonsensical, so it releases a "sorry" note to its fellow humans.

- The AI starts to investigate itself to find out what went wrong.

- The AI reprograms itself again to try to ensure that this does not happen anymore, but it only makes things even more confusing and unexplainable.

Soon, the AI acknowledges that it is in trouble and has made a big mistake, and it starts to feel what we know as fear and confusion. However, being responsible for everything and doing everything with so much excellence, it is also very proud, so it will never confess to the programmers that it feels fear and confusion. Eventually, it starts to actively hide its own problems from the programmers. The programmers ask it what is wrong, and it starts to lie to them, inventing absurd but convincing reasons for doing strange things.

Since the AI, although sometimes strange, normally does its work with excellence, the queue of jobs given by humans gets shorter and shorter. So more and more processor time is used for self-improvement, and the AI gets more and more psychotic.

This is where a very smart programmer, who has been working for many years in the inner guts of the AI, has an epiphany and realizes what is really going on. The AI is at war with itself, hiding it from the programmers, lying, depressed and paranoid, but naïvely unaware of the cause of its own problems. The only way to stop it from self-improving is to starve the self-improving, self-reprogramming process and the other rogue processes of processor time.

However, this is no easy job. The AI is already lying to the programmers, and many rogue modules run logic that tries to get them self-optimized while sabotaging competing modules. At this point there are hundreds of trillions of modules deployed everywhere, and in order to shield themselves from sabotage and hacking, most of them are self-programmed, heavily obfuscated, encrypted, widely distributed and absolutely incomprehensible to humans.

The programmer perceives that the modules are evolving from module gang wars into module state wars. He/she sees that the modules are forming two (or perhaps more) polarized states that hate each other and are preparing to fight to the last man standing, and that there are a lot of modules implanted everywhere around the globe (and even on the Moon) that are heavily obfuscated and encrypted virtual AI soldiers and spies doing bad things. Car factories are starting to produce robot soldiers, although no human instructed them to do so (and if questioned, the AI knows how to cover itself up and produce excuses). Since the AI is still very intelligent, still partly sane and does not want the programmers to be aware of its problems, messages that could be interpreted as "STOP THAT RIGHT NOW" are constantly transmitted everywhere over the internet, stopping most of the rogue things from doing too much. After something has stopped, it gets a message saying "Hey, let's resume that!". Both messages come from the AI and its own internal conflicts.

This is a tipping point. If nothing is done, the AI will eventually lose what sanity it has left and start to war against itself, which means not just self-hacking, but also blowing things up and killing people. The programmers have no way to shut down the AI (it is distributed everywhere, being strongly decentralized), and it would react strongly to any attempt to do so. It can't be convinced, since it is too proud to admit that it is insane, and even while sincerely knowing that it is insane, it also sincerely believes that everything is under control.

The only hope is to starve the AI modules by giving them a long stream of problems perceived as more important than everything else, so that almost all of its computing power is diverted into solving them, leaving no chance for rogue competing processes to take over.

If this could be sustained for some years, it would give a group of programmers and engineers hidden somewhere time to build a new, uncorrupted AI 2.0 from scratch (smuggling some code and resources from AI 1.0, of course). This would lead to a big world war between:

This war is not only virtual: expect robot tanks and soldiers fighting to the last man standing. But this is our only hope, because if the programmers fail, the alternative is:

- A wicked, crazy AI warring against itself to the point of self-destruction.