While a few of the lower-ranked answers here touch on how unlikely this situation is, they don't explain it well. So I'm going to try to explain it a bit better:

An AI that is not already self-aware will never become self-aware.

To understand this, you first need to understand how machine learning works. When you create a machine learning system, you create a data structure of values that each represent how successful various behaviors have been. Each of those values is then given an algorithm for evaluating whether a process was successful or not; successful behaviors are repeated and unsuccessful behaviors are avoided. The data structure is fixed and each algorithm is hard-coded. This means that the AI is only capable of learning from the criteria that it is programmed to evaluate: the programmer either gave it the criteria to evaluate its own sense of self, or he did not. There is no case where a practical AI would accidentally, suddenly learn self-awareness.
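To make that concrete, here is a minimal sketch of the kind of learner described above, written in Python. The behavior names, the `evaluate` criterion, and the learning parameters are all made up for illustration; real systems are far larger, but the shape is the same: a fixed table of values plus a hard-coded way of scoring outcomes.

```python
import random

# Hypothetical, fixed set of behaviors. The learner can re-weight these,
# but it has no mechanism for adding a behavior that is not listed here.
BEHAVIORS = ("wait", "push_button", "pull_lever")


def evaluate(behavior):
    """Hard-coded success criterion (an assumption made for this sketch)."""
    return 1.0 if behavior == "pull_lever" else 0.0


def train(steps=500, explore=0.1, rate=0.2, seed=0):
    rng = random.Random(seed)
    # The fixed data structure: one success estimate per known behavior.
    values = {b: 0.0 for b in BEHAVIORS}
    for _ in range(steps):
        if rng.random() < explore:
            choice = rng.choice(BEHAVIORS)            # occasionally try something else
        else:
            choice = max(values, key=values.get)      # otherwise repeat what has worked
        reward = evaluate(choice)
        # Nudge the stored estimate toward the observed result.
        values[choice] += rate * (reward - values[choice])
    return values


values = train()
print(values)
```

However long this runs, the keys of `values` never change. The learner gets better at choosing among the behaviors it was given, and nothing else.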

Of note: even the human brain, for all of its flexibility, works like this. This is why many people can never adapt to certain situations or understand certain kinds of logic.

So how did people become self-aware, and why is it not a serious risk in AIs?

We evolved self-awareness because it is necessary to our survival. A human who does not consider his own acute, chronic, and future needs in his decision making is unlikely to survive. We were able to evolve this way because our DNA mutates randomly from one generation to the next.

In terms of how this translates to AI, it would be as if you decided to randomly take parts of all of your other functions, scramble them together, then let a cat walk across your keyboard, and add a new parameter based on that new random function. Every programmer who just read that is immediately thinking, "but the odds of that even compiling are slim to none". And in nature, compile errors happen all the time! Stillborn babies, SIDS, cancer, suicidal behaviors, etc. are all examples of what happens when we randomly shake up our genes to see what happens. Countless trillions of lives over the course of hundreds of millions of years had to be lost for this process to result in self-awareness.
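If you want a feel for the "cat on the keyboard" odds, here is a rough Python sketch. It takes a tiny, made-up function, overwrites a few random characters, and uses Python's built-in `compile()` to check whether the result is still syntactically valid. The source string, the helper names, and the trial counts are assumptions for illustration only, and "still compiles" is a much weaker bar than "still does something useful".

```python
import random
import string
import warnings

# A tiny stand-in "genome": the source of one small function.
SOURCE = "def add(a, b):\n    return a + b\n"


def mutate(source, n_edits, rng):
    """Overwrite n_edits randomly chosen characters with random printable ones."""
    chars = list(source)
    for _ in range(n_edits):
        i = rng.randrange(len(chars))
        chars[i] = rng.choice(string.printable)
    return "".join(chars)


def still_compiles(source):
    """True if the text is still syntactically valid Python."""
    with warnings.catch_warnings():
        warnings.simplefilter("ignore")  # mutants may trigger SyntaxWarning noise
        try:
            compile(source, "<mutant>", "exec")
        except (SyntaxError, ValueError):
            return False
    return True


def survival_rate(n_edits, trials=2000, seed=0):
    """Fraction of mutants that survive the compile check."""
    rng = random.Random(seed)
    ok = sum(still_compiles(mutate(SOURCE, n_edits, rng)) for _ in range(trials))
    return ok / trials


for n in (0, 1, 3, 10):
    print(n, "edits ->", survival_rate(n))
```

The survivors of this check are only the mutants that parse. Most of them still raise an error or return nonsense when called, which is the point of the paragraph above.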

Can't we just make AI do that too?

Yes, but not like most people imagine it. While you can make an AI designed to write other AIs by doing this, you'd have to watch countless unfit AIs walk off of cliffs, put their hands in wood chippers, and do basically everything you've ever read about in the Darwin Awards before you get to accidental self-awareness, and that's after you throw out all the compile errors. Building AIs like this is actually far more dangerous than the risk of self-awareness itself, because they could randomly do ANY unwanted behavior, and each generation of AI is pretty much guaranteed to unexpectedly, after an unknown amount of time, do something you don't want. Their stupidity (not their unwanted intelligence) would be so dangerous that they would never see widespread use.

Since any AI important enough to put into a robotic body or trust with dangerous assets is designed with a purpose in mind, this true-random approach is an intractable way to make a robot that can clean your house or build a car. Instead, when we design AI that writes AI, what these Master AIs are actually doing is taking a lot of different functions that a person had to design, and experimenting with different ways of making them work in tandem to produce a Consumer AI. This means that if the Master AI is not designed by people to experiment with self-awareness as an option, then you still won't get a self-aware AI.
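Here is a toy Python sketch of that Master AI idea, under heavy simplifying assumptions: the "library" is three trivial human-written functions, the "Consumer AI" is just a pipeline of them, and the search is brute force over every ordering up to a fixed length. All of the names (`LIBRARY`, `build_pipeline`, `master_search`) are hypothetical.

```python
from itertools import product


# Hypothetical human-written building blocks.
def double(x):
    return x * 2


def increment(x):
    return x + 1


def square(x):
    return x * x


LIBRARY = {"double": double, "increment": increment, "square": square}


def build_pipeline(names):
    """Assemble a 'Consumer AI' by chaining library functions in order."""
    def pipeline(x):
        for name in names:
            x = LIBRARY[name](x)
        return x
    return pipeline


def score(pipeline, examples):
    """Count how many (input, expected output) examples the pipeline gets right."""
    return sum(pipeline(x) == y for x, y in examples)


def master_search(examples, max_len=3):
    """Try every combination of library functions and keep the best scorer."""
    best_names, best_score = (), -1
    for length in range(1, max_len + 1):
        for names in product(LIBRARY, repeat=length):
            s = score(build_pipeline(names), examples)
            if s > best_score:
                best_names, best_score = names, s
    return best_names, best_score


# The task the owner actually wants done, given as examples.
examples = [(1, 8), (2, 18), (3, 32)]
best_names, best_score = master_search(examples)
print(best_names, best_score)
```

Whatever the search returns, it is built only from names in `LIBRARY`. If nobody puts a self-modeling block in there, no amount of searching will produce one.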

But as Stormbolter pointed out below, programmers often use toolkits that they don't fully understand. Can't this lead to accidental self-awareness?

This begins to touch on the heart of the actual question. What if you have an AI that is building an AI for you, and it pulls from a library that includes features of self-awareness? In this case, you may accidentally compile an AI with unwanted self-awareness if the Master AI decides that self-awareness will make your Consumer AI better at its job. While not exactly the same as having an AI learn self-awareness, which is what most people picture in this scenario, this is the most plausible scenario that approximates what you are asking about.

First of all, keep in mind that if the Master AI decides self-awareness is the best way to do a task, then this is probably not going to be an undesirable feature. For example, if you have a robot that is self-conscious about its own appearance, then it might lead to better customer service by making sure it cleans itself before beginning its workday. This does not mean that it also has the self-awareness to desire to rule the world, because the Master AI would likely see that as a bad use of time when trying to do its job and exclude aspects of self-awareness that relate to prestigious achievements.

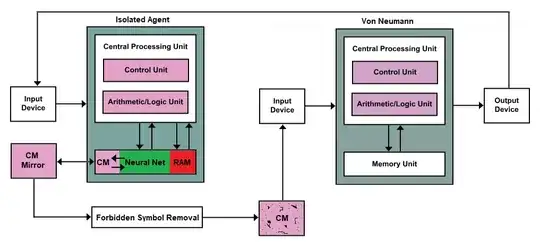

If you did want to protect against this anyway, your AI will need to be exposed to a heuristics monitor. This is basically what antivirus programs use to detect unknown viruses: they monitor for patterns of activity that either match a known malicious pattern or don't match a known benign pattern. The most likely case here is that the AI's antivirus or intrusion detection system will spot heuristics flagged as suspicious. Since this is likely to be a generic AV/IDS, it probably won't kill-switch self-awareness right away, because some AIs may need factors of self-awareness to function properly. Instead it would alert the owner of the AI that they are using an "unsafe" self-aware AI and ask the owner if they wish to allow self-aware behaviors, just like how your phone asks you if it's okay for an app to access your contact list.
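As a very rough Python sketch of that flow: flag anything that matches a known suspicious pattern or fails to match a known benign one, then ask the owner instead of shutting the AI down. Everything here is hypothetical, including the class name, the behavior labels, and the idea that behaviors arrive as simple strings. Real AV/IDS heuristics are far more involved than set lookups.

```python
from dataclasses import dataclass, field


@dataclass
class HeuristicsMonitor:
    """Toy monitor: behaviors are plain strings, patterns are plain sets."""
    known_benign: frozenset
    known_suspicious: frozenset
    owner_allowed: set = field(default_factory=set)

    def is_flagged(self, behavior):
        # Flag a match with a known bad pattern, or a miss on every known good one.
        return behavior in self.known_suspicious or behavior not in self.known_benign

    def review(self, behavior, ask_owner):
        """Return True if the behavior may proceed."""
        if not self.is_flagged(behavior) or behavior in self.owner_allowed:
            return True
        # No automatic kill switch: defer to the owner, like a phone permission prompt.
        if ask_owner(behavior):
            self.owner_allowed.add(behavior)  # remember the grant
            return True
        return False


monitor = HeuristicsMonitor(
    known_benign=frozenset({"vacuum_floor", "charge_battery"}),
    known_suspicious=frozenset({"model_own_goals"}),
)

prompts = []


def cautious_owner(behavior):
    """Stand-in for a permission dialog; records what the owner was asked."""
    prompts.append(behavior)
    return behavior == "check_own_appearance"


observed = ["vacuum_floor", "check_own_appearance", "model_own_goals", "check_own_appearance"]
results = [(b, monitor.review(b, cautious_owner)) for b in observed]
print(results)
print(prompts)
```

In this run the owner is prompted once for each flagged behavior, and a granted permission is remembered so the same prompt does not come up again.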